Rebecca Ann “Burger Becky” Heineman passed away last month, after a brief but intense battle with cancer. Her contributions to video game history are innumerable; if you’ve played a video game made within the past 30 years, chances are she had a hand in its creation in some way. After I met her at GDC in March 2014, she quickly became one of my mentors. But more than that, I am proud to have called both her and her late wife, Jennell Jaquays, my friends.

Becky and I would chat about all sorts of things, ranging from industry gossip to life to pop culture to programming. I am sad that my friend is no longer available for late night messages or calls, but I’ll always have fond memories to look back upon, not to mention her valuable lessons and wisdom. Some of these I’d like to share in this post.

My “first encounter” with Becky was not with her directly but, as with most people, through her work, though I didn’t know it at the time.

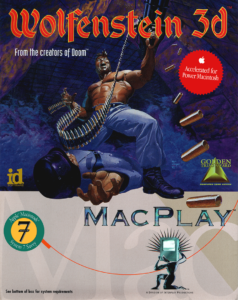

My dad—now retired—was a high school band director in 1994. That fall, one of his students gave him a floppy disk with the three-level shareware First Encounter of Wolfenstein 3D for Mac, and encouraged my dad to take it home and play it. Dad played it for about ten seconds and quickly determined it wasn’t for him, but knowing my penchant for gaming, he let me give it a try instead. My sister and I played through those three levels over and over through the rest of that year. I asked for—and got—the full ninety-level version for Christmas.

I had never seen a game like it before; its first-person perspective and animation (such as it was; my dad’s 16MHz Macintosh II could barely run it) blew me away and set my expectations for what a computer game could be. Yet at that time, young me thought that software creation was the work of teams of white-coated scientists in sterilized laboratories like I saw in cartoons; surely this game had to have been developed by a large company armed with legions of workers. After all, the game opened with logos for two very legitimate-looking studios: MacPlay, and id Software. Still, the seed was planted in my head as I slowly taught myself programming that maybe, someday, I could be good enough to work for a company making something as enthralling as Wolfenstein.

My first interaction with Becky would come several years later, in 2001.

By this time I knew who she was and knew of her involvement with Wolfenstein 3D, among several other games. I was active on Scott Kevill’s GameRanger service, and United Developers (the trio of MacPlay / Mumbo Jumbo / Contraband Entertainment, the last being Becky’s company at this time) held a Q&A about the development of Aliens vs. Predator, a game that they had just completed and were about to ship. I asked the team a couple of questions, and Becky answered them both! I remember being taken aback not just that I was communicating with Real Game Developers, but also, after the session ended, that I had been selected to receive a free copy of the game. Certainly this experience left a favorable impression not just of MacPlay, not just of Mac game development, but also of Becky.

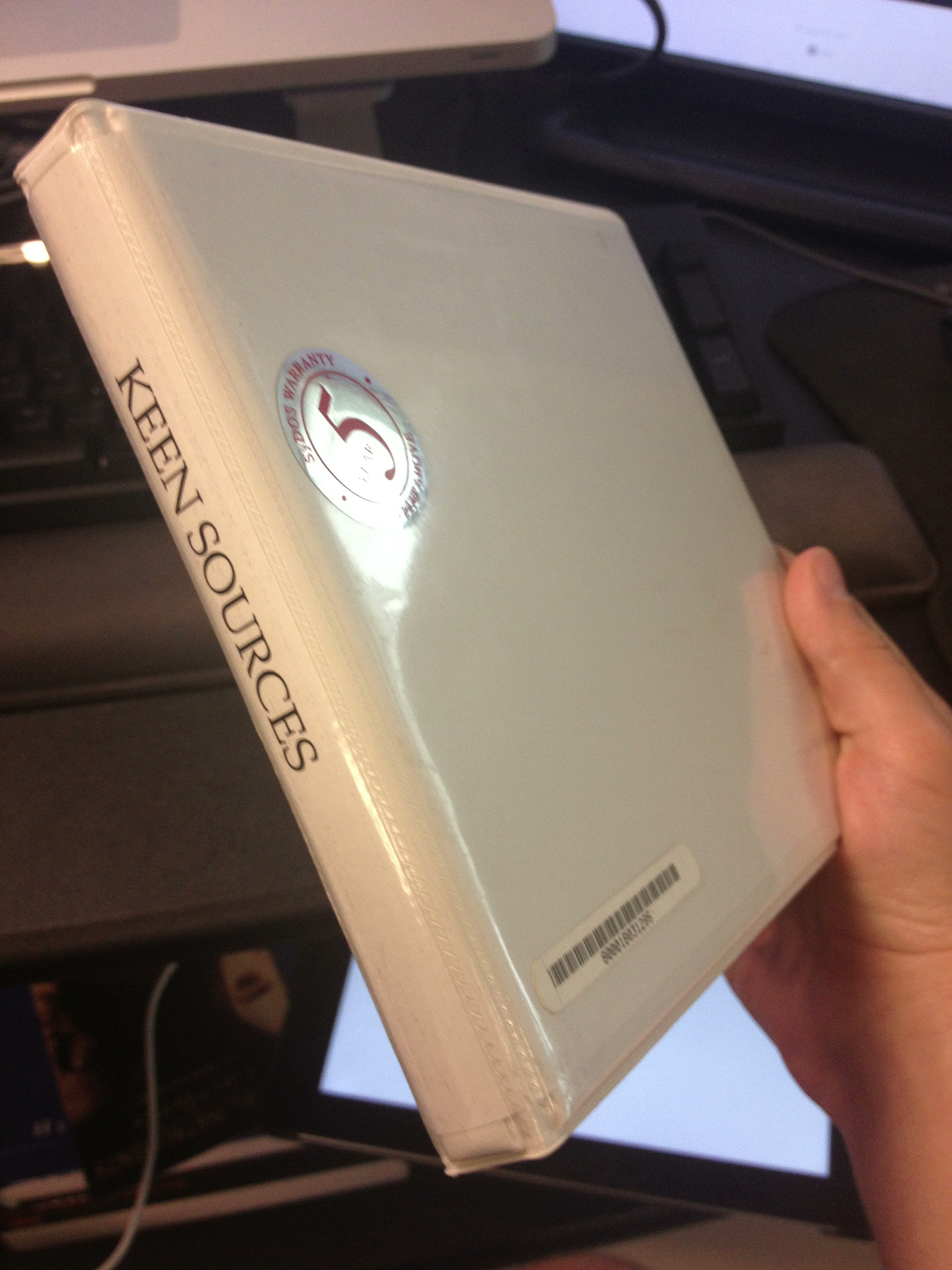

By 2013 I was working at PlayFirst, under the creative direction of Tom Hall (it all connects to Wolfenstein… 😀 ). Tom had entrusted me with his SyQuest cartridge containing the source code to Commander Keen 4-6, and word quickly spread once I had imaged the cartridge that the Keen source code had been found and preserved.

I was following Becky on social media by this time, but I received an unsolicited message from her on Facebook in September 2013. Seems she had seen the news about the Keen source code, and wanted to talk. 😀 I was more than willing to chat, especially knowing that she had the source code to the Mac version of Wolfenstein 3D she had done almost two decades prior. I finally met Becky in person for the first time at GDC 2014, ambushing her after a panel she was on at Moscone North. She fortunately recognized me and we became Facebook friends very shortly after that, instantly bonding over game programming and industry history. At GDC 2016 I offered to buy her dinner at Buca di Beppo; the deal we arranged was that I’d provide the food, and she would provide the entertainment in the form of war stories. 😀 I got to learn the “true Hollywood story” of the Mac versions of not only Wolfenstein 3D, but also the cancelled port of Half-Life. Someday maybe I’ll write a bit about what I learned that night.

I shipped The Journeyman Project: Pegasus Prime on DVD and GoG in late 2013 to coincide with The Journeyman Project’s 20th anniversary. The production of that by itself warrants its own story, but leading up to the fall of 2016 I was putting the finishing touches on a version for Steam that integrated the Steamworks API for achievements, cloud saves, and controller support. It was with the lattermost that I was having trouble; I encountered, verified, and documented (with reliable repro steps) a bug in Steamworks’s controller code… but could not find a way to communicate any of this to Steamworks engineers at Valve! The official way to report bugs, as it was explained to me, was to make a post on Steam’s forums. I did so, but was met with fellow programmers and non-programmers, none of whom were from Valve, advising me to follow the same steps outlined in the Steamworks documentation with which I was well familiar. Ugh. No amount of my explaining “no, I found a legitimate bug here” would get me an audience with an engineer who could help upstream a patch.

With my efforts going nowhere through the “official” channels, I realized: Steam Dev Days is coming up in October 2016. I registered myself and scored an invitation to Valve’s developer conference in Seattle. For the price of round-trip plane tickets, my goal was to track down and corner a Steamworks programmer and show them the bug I was fighting. I managed to do so; after waiting in line for a couple of hours (most folks were there to talk about Valve’s forays into virtual reality) it took only 30 seconds to demonstrate and explain the problem to the engineer, and sure enough, two weeks later the release notes of the latest version of the Steam client indicated my bug was fixed. ❤️

Becky and Jennell were living in a high-rise apartment in the Belltown neighborhood of Seattle. They graciously offered to let me stay with them, which was great because my visit was pretty low-budget anyway. That evening, I arrived at their apartment complex, but because Becky was out running an errand, nobody was there to meet me. The neighborhood was perhaps not the safest in town; not that I was worried for myself, but I preferred being indoors at that time of the night. The complex wouldn’t let me in without somebody to come get me, so Jennell came down to greet me, and it was here that I met Jennell for the first time. We talked for hours about her time at id Software, and stories—with varying degrees of “juiciness”—about her experiences there.

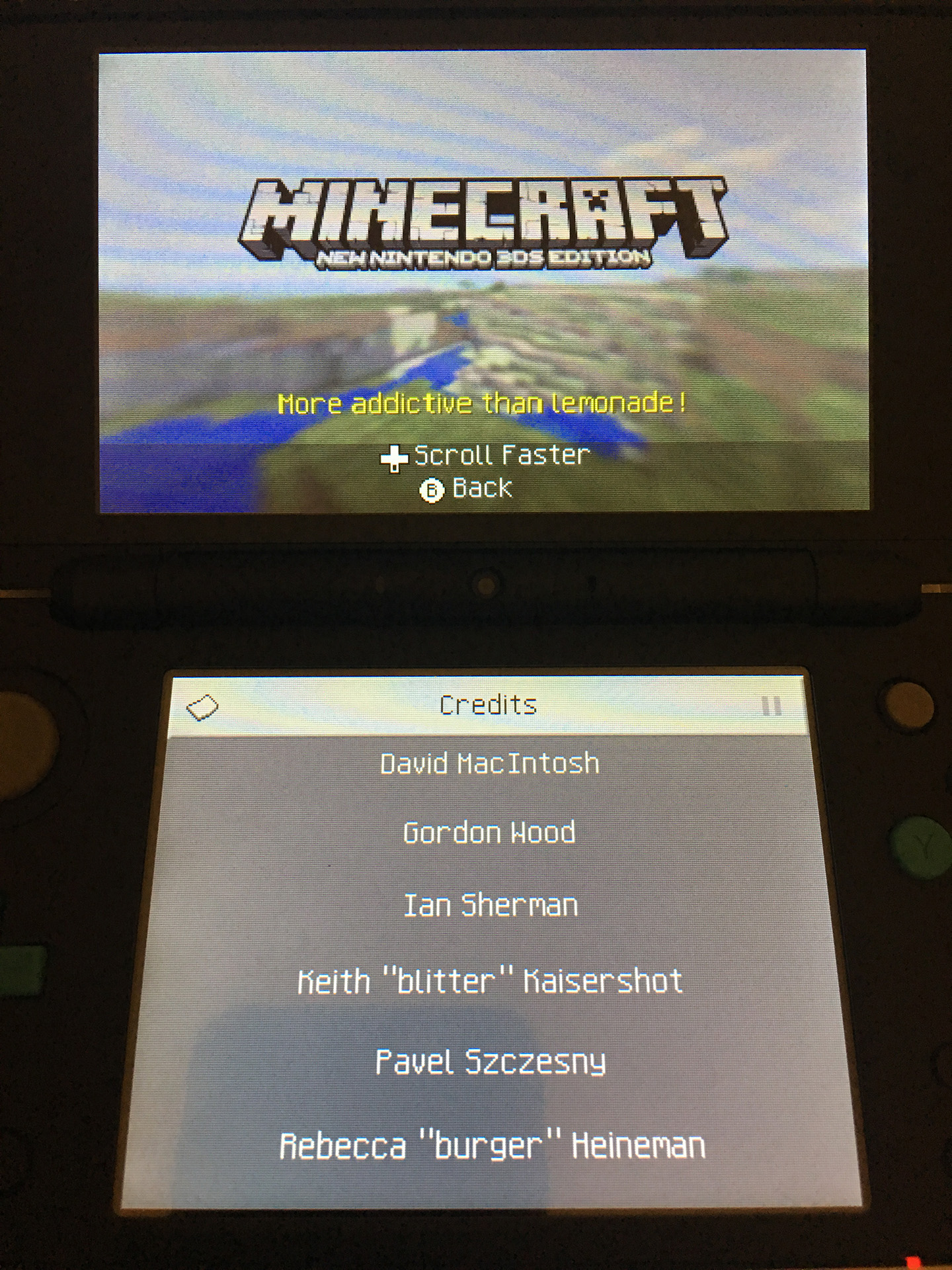

By May 2017, Becky was in need of contract work. After all the coding wisdom she had imparted to me over the years, I finally got a chance to return the favor by helping to get Becky hired as a short-term contractor working with me on Minecraft: New Nintendo 3DS Edition. We pair programmed over Skype to help get its UI over the finish line; she up in Seattle, me down in Emeryville. In between debugging new builds, we brainstormed a lot about what we each wanted to do with the Wolfenstein 3D franchise if Becky ever got the opportunity to revisit it. 🤔

Becky and Jennell moved down to El Cerrito in 2018. I loved this; it meant they lived close enough to me that I could hang out with them a lot more often, which I did. I would often deliver them food, watch their place, and grab any delivered packages while they were away. Becky likewise came down to my housewarming in 2018.

Their archives took up their entire two-car garage, but Becky and Jennell spent most of their time in the front room both doing what they loved most: making games and caring for their cats. Jennell also liked to make and paint miniatures. Years ago, at SVVR 2016, I had myself 3D scanned in a couple of different poses. I sent Jennell one of the files and, on one visit, was delighted to find a tiny painted 3D-printed miniature of myself in her collection, done up to resemble a zombie.

I drove Becky and Jennell up to Portland for the October 2018 Portland Retro Gaming Expo in my 2007 Mitsubishi Eclipse. They in turn let me stay with them in their room at the Crowne Plaza downtown. We went to Prime Rib + Chocolate Cake for dinner (RIP), and the next day I joined Becky with David Crane, Garry Kitchen, and other Activision alumni for lunch at the Red Robin across from the convention center.

Becky bought herself a boxed LaserDisc player at the show, and sat with it in the back seat of my Eclipse the whole ride home. Poor Jennell rode in the front seat and was uncomfortable most of the ride, owing to her stature, though she opted to recline as much as she could for as long as she could during the return trip.

In 2020, Becky and Jennell moved to the Dallas area into the home that Jennell had designed during her time at id Software. Becky would appear at several QuakeCons after that and we’d often hang out; I’d always save her a spot next to me in the BYOC, and she got to meet several members of my Quake clan as well as catch up with friends of hers connected to id, Microsoft, and the 90s gaming scene. One QuakeCon in particular stands out to me: in 2023, I made a point to get lunch with Becky and Jennell at the nearest Torchy’s Tacos. That turned out to be the last time I’d ever see Jennell; she sadly passed away in January 2024. Becky and I continued to hang out whenever I was in Dallas, usually preferring Torchy’s again in memory of that first lunch with her and Jennell. She would also occasionally host her Burgertime streams where I participated in the chat, and when gossip got “too hot for TV” we’d talk on the phone every now and again.

Becky even made a quick cameo (@ 7:54) in a video with me, Patrick Martin, and Alex Mejia.

Like most folks close to her, I learned of her cancer diagnosis via her social media announcement. In my last phone call with her, I asked how she was feeling and expressed how confident I was that she’d beat it given how prompt she was with her discovery and medical attention. She continued to have an upbeat attitude and sense of humor in spite of two rounds of chemotherapy and the ultimately unfortunate prognosis. I was in midtown Manhattan when she shared the news from her doctors that she was unlikely to make it through the rest of the following week. She and I had a brief exchange on Discord that Sunday night, but I had a gut feeling that I should try to see her as soon as possible, so upon arriving home early Monday morning I got on the first flight to Dallas.

I made it to the hospital just in time to see Becky and say goodbye. Along with several members of her family, I was with her two hours later when she passed that Monday afternoon.

As I’m writing this, it still hasn’t quite hit me that she’s gone. Her work endures, and there is so much that she created and put out into the world that memories of her will probably linger well after I have left this earth. The late-night Discord chats, the GDC dinners and meet-and-greets, the random shenanigans, encounters at nerdy conventions… there will always be an empty spot, both physically and metaphysically, in these places from now on. I owe a lot of my career in games to Burger Becky, and with her passing, the world has suffered a great loss. But for all that the loss of Becky has taken from us, she has put so much back into the world. Over the past month I’ve met a number of her friends, both industry and not, who through very personal stories have corroborated just how generous a person she was. I used to selfishly think I was her protégé, but she was a mentor to so many more people both in their careers and in life. I will miss her greatly, but as long as memories of her persist and I pay forward her generosity, she’ll always be with me in some form. I hope that in this way I can introduce Becky to new friends who weren’t lucky enough to have met her in real life.

Rest in power, Burger Becky. Narf! ❤️